Recent Posts

Stay Updated

Subscribe to our newsletter for the latest articles, and updates in web development.

Most Liked

Explore the most loved tutorials and articles by our community.

Subscribe to our newsletter for the latest articles, and updates in web development.

Explore the most loved tutorials and articles by our community.

We’ve all seen it: a beautifully designed React app that breaks the moment you hit "Refresh." Or a search result page that, when shared with a colleague, leads them to a blank "No Results Found" screen because the search state was tucked away in a local useState hook instead of the URL.

Let’s be honest: setting up authentication is usually the part of a project we dread most. Between handling session cookies, managing OAuth callbacks, and keeping your database schemas in sync, it’s easy to lose days to "auth hell."

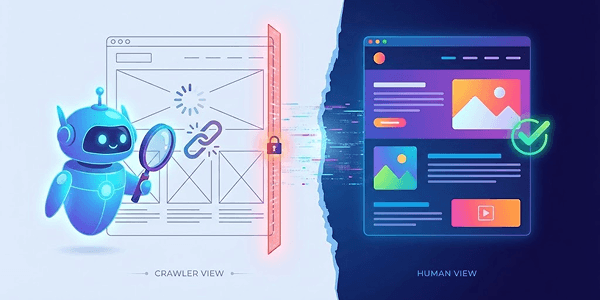

It is one thing to build a website; it is an entirely different beast to get it "Google-approved." You spend weeks, maybe months, migrating your old website to a shiny new tech stack. You move from a basic static site generator to something powerful like Next.js or Remix. The new site is faster, slicker, and objectively better.

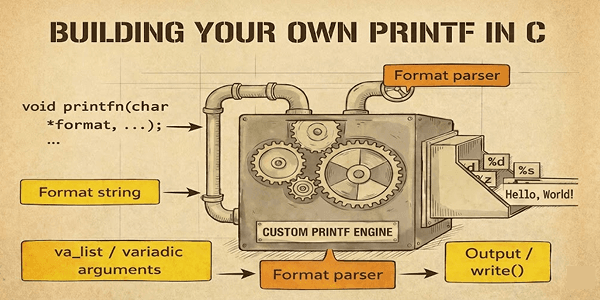

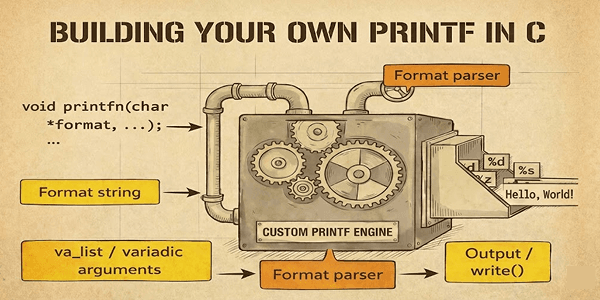

Re-implementing `printf` is one of those C projects that forces you to get serious about parsing, variadic functions, formatting rules, and memory discipline. But before any of that, the real foundation is the project structure—because good structure makes your code easier to extend, test, and ship as a reusable library.

C is a versatile language: it can be used to perform many functions. The standard C library comes with a bunch of functions in stdio.h a header file that helps output information to the terminal, string buffer, or file stream.

Most tutorials or documentation will suggest using "admin" as the username and a blank password to log in to Mikrotik for the first time. In some cases, it will succeed, but recently, you might receive a "username or password wrong" error with an empty password on a brand-new MikroTik router; it is likely because newer models no longer use a blank default password.

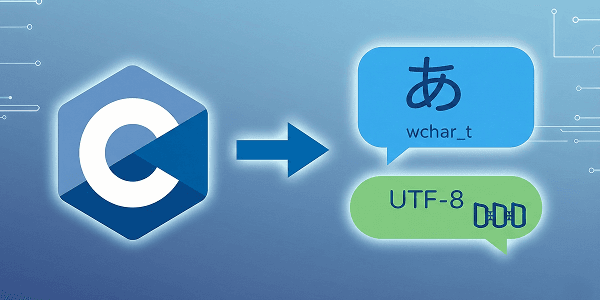

In the early stages of programming, different regions and their own standards for representing characters used in their writing systems, i.e., they had their own encoding systems. Some like the most common ASCII fit their entire characters with a positive signed single byte (0-127) effectively, while others utilize an unsigned char byte (0-255) to represent their characters, among other encoding schemes (known as code pages, extended ASCII, or platform-specific encodings like Shift-JIS, EUC-KR, GB2312, and Big5). However, this resulted in conflict, as text in one encoding might be misinterpreted in another, described as "garbled text," "mojibake," or the "encoding nightmare." What exacerbated the issues was that the encoding was implemented not only in programming language compilers/editors but also in the OS and display servers/terminals.

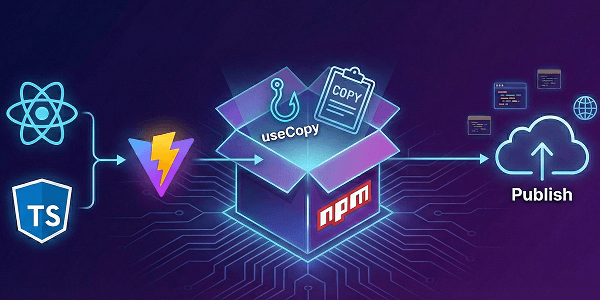

Publishing your own React hook to npm is a massive milestone. It allows you to reuse your code across projects and share your work with the global developer community.

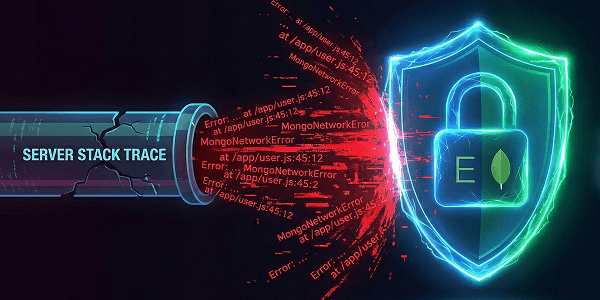

If you have been writing Node.js and Express applications for a while, you have probably written a controller that looks like this:

Building with Next.js is an absolute joy. The routing is snappy (especially with the new App Router), the components are modular, and the developer experience is top-tier. But eventually, every serious application hits the same wall: Where do I store the data?